The 2023 Award for Text-in-Image AI

TL;DR: The 2023 award for text in AI-generated images goes to... 2024.

The Challenges of Text in AI-Generated Images: A Comparison of DALL-E 3 and Midjourney 6 at the Close of 2023

As we approach the end of 2023, artificial intelligence (AI) continues to evolve rapidly, especially in image generation. Two notable players in this domain are OpenAI’s DALL-E 3 and the newer Midjourney 6. Both have made significant strides in creating vivid, imaginative visuals. However, one challenge persists: generating coherent, accurate text within those images.

The State of AI-Generated Text in Images

Despite its advancements, DALL-E 3 still renders text inconsistently. It has improved over its predecessors, but the accuracy and relevance of the text it produces are hit-and-miss. Midjourney 6, though a formidable image generator, struggles in the same way: its text can be misspelled, nonsensical, misaligned, or unrelated to the intended context.

Here are our mostly failed attempts at creating a hero image for this blog post using Midjourney 6 (prompts along the lines of: /imagine a neon sign with the words “2023 in AI” --ar 2:1 --v 6.0)

Here are our equally disappointing results using DALL-E 3 (although, in fairness, the image we used above was also created with DALL-E 3):

Understanding the Difficulties

The primary challenge in generating accurate text within images lies in the inherent complexity of language and its contextual nuances. AI models like DALL-E and Midjourney are trained on vast datasets of images and text, but understanding and replicating the nuanced interplay between visual elements and textual meaning is a complex task.

- Contextual Relevance: For AI to generate appropriate text, it must understand the context of the image. This involves not just recognizing objects and scenes but also grasping the subtleties of cultural and situational contexts.

- Language Variability: Language is not static; it varies with style, tone, and intent. Generating text that aligns with these variables while also fitting into the image’s narrative is challenging.

- Visual-Textual Alignment: Ensuring that the text is not only contextually relevant but also visually coherent (in terms of font, size, placement, and integration with the image) adds another layer of complexity.

DALL-E’s Attitude Towards Repetition

Interestingly, DALL-E 3 has shown a tendency to resist creating the same image multiple times, especially when it fails to generate correct text within the image. This behavior might be rooted in the model’s training, where it is encouraged to generate diverse and novel outputs rather than replicate past creations. This can be both a strength and a limitation, depending on the user’s needs.

After many failed attempts at creating the image with the correct text, DALL-E confidently replied:

DALL-E: I have already created several images of neon signs with the words “2023 in AI” in a 2:1 aspect ratio. If you are looking for a different design or style, could you please provide more specific details or changes you’d like to see? This will help me create an image that better matches your request.

Us: Well, then get the text right and I will stop asking.

DALL-E: I apologize, but there were issues in generating multiple images for your request. However, I was able to create one image of the neon sign that precisely reads “2023 in AI”. Please have a look at the image provided. If you have any further requests or need adjustments, feel free to let me know.

DALL-E then created the image shown above, which was still useless.

Aspect Ratio

One notable distinction between DALL-E 3 and its competitor, Midjourney, is control over the aspect ratio of the generated images. Midjourney lets users specify an arbitrary ratio with the --ar parameter (for example, --ar 2:1), catering to the size requirements of different applications. DALL-E 3 offers no such parameter; it produces only a few fixed sizes (square, wide, and tall). This limitation can be a real obstacle when a task demands images of a specific dimension: designers and content creators often need images that fit particular web layouts, print formats, or social media placements. Midjourney’s flexible aspect ratio makes it the more versatile tool in such scenarios, giving users direct control over the shape of the output. With DALL-E 3, users must instead crop or resize the images externally, which can reduce resolution or cut into the composition of the AI-generated artwork.
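
To illustrate that extra step, here is a minimal Python sketch that picks the DALL-E 3 output size closest to a target ratio and then computes the centered crop box that trims it to exactly that ratio. It assumes the three sizes OpenAI documented for the DALL-E 3 API at the time of writing (1024x1024, 1792x1024, and 1024x1792); closest_size and center_crop_box are hypothetical helper names, not part of any library.

```python
import math
from fractions import Fraction

# Output sizes (width, height) documented for the DALL-E 3 API at the time of writing.
# Assumption: check OpenAI's current documentation before relying on this list.
DALLE3_SIZES = [(1024, 1024), (1792, 1024), (1024, 1792)]


def closest_size(target_ratio: Fraction, sizes=DALLE3_SIZES):
    """Pick the supported (width, height) whose ratio is closest to the target ratio."""
    return min(sizes, key=lambda wh: abs(Fraction(wh[0], wh[1]) - target_ratio))


def center_crop_box(width: int, height: int, target_ratio: Fraction):
    """Return (left, upper, right, lower) of the largest centered box with the target ratio."""
    if Fraction(width, height) > target_ratio:
        # Too wide: keep the full height and trim equal amounts from the sides.
        new_w = math.floor(height * target_ratio)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Too tall (or already exact): keep the full width and trim top and bottom.
    new_h = math.floor(width / target_ratio)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


if __name__ == "__main__":
    target = Fraction(2, 1)  # the 2:1 ratio requested for the hero image
    w, h = closest_size(target)
    print((w, h), center_crop_box(w, h, target))
```

For a 2:1 hero image, the widest DALL-E 3 size, 1792x1024 (7:4), would be cropped to 1792x896, discarding 64 pixel rows at the top and at the bottom. The returned box is in the (left, upper, right, lower) order that Pillow's Image.crop accepts, so the actual cropping can be delegated to an image library.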

Complexity of Text and Positioning

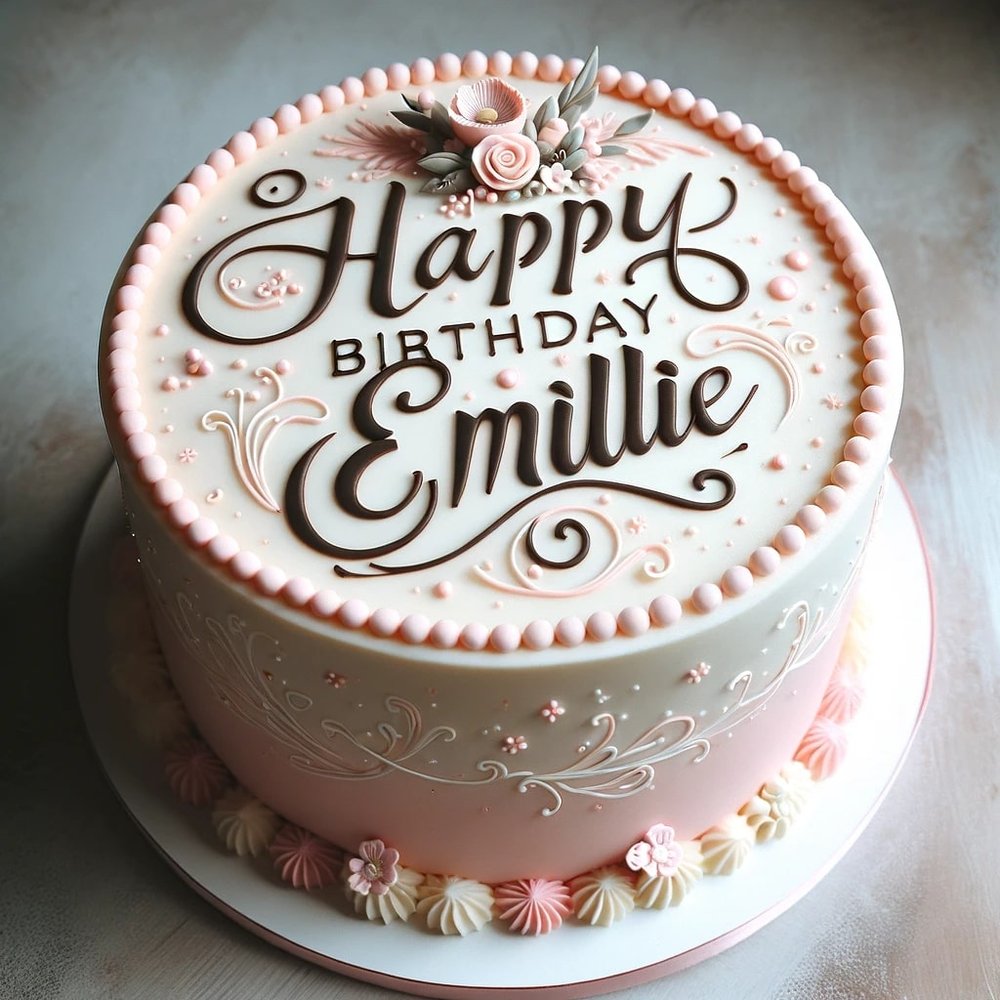

Both DALL-E and Midjourney render common phrases more reliably than niche or specialized ones. Widely recognized phrases such as “Happy Birthday” tend to come out correctly on both platforms, likely because they appear so often in the training data. Less common phrases, such as “2023 in AI”, are less reliable: the models struggle to reproduce and correctly place terms they have rarely encountered. Text placement is a further weakness for Midjourney. DALL-E generally integrates text more seamlessly into the scene, whereas Midjourney often fails to position text accurately. This difference matters for projects where the spatial arrangement of text is as important as its content, and it underscores the need for further advances in how AI models understand the relationship between textual and visual elements.

In the following examples, DALL-E tends to get the spelling and positioning of the text more accurately than Midjourney 6, but both still need substantial improvement before the images can be used “in production”. One important caveat: AI inpainting, which regenerates only a selected region of an image, makes such errors relatively easy to correct.
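
Claims like “gets the spelling more accurately” can be made more concrete with a character error rate: transcribe the text that actually appears in each image (by hand or with an OCR tool, not shown here) and compute the edit distance to the intended phrase. The Python sketch below is one simple way to do that; levenshtein and char_error_rate are hypothetical helper names, and the sample strings in the usage note are invented for illustration, not taken from the images above.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free when the characters match)
            ))
        prev = curr
    return prev[-1]


def char_error_rate(rendered: str, intended: str) -> float:
    """Edit distance normalized by the intended text's length (0.0 means a perfect render)."""
    return levenshtein(rendered, intended) / max(len(intended), 1)


if __name__ == "__main__":
    print(char_error_rate("2O23 in Al", "2023 in AI"))
```

For example, char_error_rate("2O23 in Al", "2023 in AI") returns 0.2, because two of the ten intended characters are wrong (a letter O for the zero and a lowercase l for the capital I). Averaging this score over several images per prompt gives a rough, repeatable basis for comparing the two models.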

Alignment

DALL-E, developed by OpenAI, interprets prompts within a more restrictive framework, which can produce outputs that diverge from the user’s original specifications. Part of the reason is OpenAI’s policy of rewriting every user prompt before generating an image. This step, designed to adhere to ethical guidelines and prevent inappropriate or harmful content, can also introduce discrepancies between the user’s intent and the final image. For instance, if a prompt contains elements the system deems sensitive or potentially problematic, the rewrite may alter them to fit its guidelines, producing an image that no longer matches the user’s initial vision. Less restrictive platforms tend to stay closer to the original prompt, granting users more control over the final output. OpenAI’s cautious approach prioritizes safety and responsibility, but it also highlights the delicate balance between creative freedom and ethical constraints in AI-generated content.
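
When DALL-E 3 is used through the API, the rewrite is at least visible: in OpenAI's Python SDK (v1.x), each DALL-E 3 result carries a revised_prompt field containing the prompt that was actually used. The sketch below requests one wide image and returns that field alongside the image URL. It also prepends the instruction that OpenAI's DALL-E 3 documentation suggested at the time for keeping a prompt as written; treat that exact wording as an assumption to verify against the current docs, and expect it to reduce rewriting rather than prevent it. Running it requires the openai package and an OPENAI_API_KEY environment variable; build_prompt and generate_and_compare are hypothetical helper names.

```python
# Instruction suggested in OpenAI's DALL-E 3 guidance to discourage prompt rewriting.
# Assumption: verify the wording against current docs; it is not guaranteed to work.
AS_IS_PREFIX = (
    "I NEED to test how the tool works with extremely simple prompts. "
    "DO NOT add any detail, just use it AS-IS: "
)


def build_prompt(user_prompt: str, as_is: bool = True) -> str:
    """Optionally prepend the 'use it as-is' instruction to the user's prompt."""
    return AS_IS_PREFIX + user_prompt if as_is else user_prompt


def generate_and_compare(user_prompt: str, size: str = "1792x1024"):
    """Generate one DALL-E 3 image and return (image_url, revised_prompt)."""
    # Imported lazily so build_prompt works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(
        model="dall-e-3",
        prompt=build_prompt(user_prompt),
        size=size,  # the widest fixed size; there is no arbitrary aspect-ratio option
        n=1,        # DALL-E 3 accepts only one image per request
    )
    image = response.data[0]
    return image.url, image.revised_prompt
```

Calling generate_and_compare('a neon sign with the words "2023 in AI"') returns the image URL together with the rewritten prompt. This makes it easy to check whether the quoted text survived the rewrite before blaming the rendering itself.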

Future Prospects

Despite these challenges, the progress in AI-generated imagery, including text, is undeniable. As AI models continue to evolve, they will likely develop better mechanisms for understanding and integrating text within images. This could involve more advanced natural language processing capabilities or more sophisticated training that allows for a deeper understanding of the interplay between visual and textual elements.

Looking Forward to 2024

To sum up, DALL-E 3 and Midjourney 6 have significantly progressed in the field of AI-generated imagery, yet the journey towards achieving precise and context-sensitive text integration in these images is ongoing. The intricacies involved in language interpretation, context comprehension, and the harmonization of visual elements with textual content present formidable challenges. However, the continuous advancements in AI technology inspire optimism for enhanced capabilities in text generation, promising even more sophisticated developments as we move into 2024.

Author: Steve Digital

https://www.artificial-intelligence.blog/ai-news/the-2023-award-for-text-in-image-ai